Platform for malicious client detection in federated learning

Dominik Kolasa

supervisor: Maria Ganzha

Federated learning is a process in which many clients contribute to a global model. In this setting, some clients may, intentionally or by mistake, send incorrect updates to the global model. I address this issue by filtering out such contributions. I have developed a new method that requires no testing data to run. The research shows that the method works across different setups and filters out malicious clients accurately compared with other methods. I developed a model that combines multiple protection strategies: testing, weight comparison and rating. I am currently implementing a federated learning platform with protection against malicious clients enabled. The platform makes it possible to test this protection on different datasets.
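The abstract does not describe how the weight comparison and rating strategies work internally. The sketch below shows one hypothetical way to compare client updates without any test data: every update is measured against the coordinate-wise median of all updates, clients that lie far from it are flagged, a per-client rating is adjusted, and only trusted updates are averaged. The functions `flag_outliers`, `update_ratings` and `aggregate`, and the thresholds `k`, `penalty` and `reward`, are assumptions made for illustration, not the author's implementation.

```python
"""Hypothetical data-free filter: weight comparison plus a per-client rating.

Assumption: illustrative sketch only, not the author's method. Function names
and the thresholds k, penalty and reward are invented here.
"""
import math
from statistics import median


def coordinate_median(updates):
    # Element-wise median over all client weight vectors (a robust "center").
    return [median(column) for column in zip(*updates)]


def euclidean(a, b):
    # Plain Euclidean distance between two weight vectors.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def flag_outliers(updates, k=3.0):
    """Flag clients whose distance to the median update exceeds k times the
    median distance. Uses only the submitted weights, no test data."""
    center = coordinate_median(updates)
    distances = [euclidean(u, center) for u in updates]
    typical = median(distances)
    return {i for i, d in enumerate(distances) if d > k * typical}


def update_ratings(ratings, flagged, penalty=0.5, reward=0.1):
    """Hypothetical rating rule: flagged clients lose score, the rest slowly
    regain it; scores are clamped to [0, 1]."""
    for cid in ratings:
        delta = -penalty if cid in flagged else reward
        ratings[cid] = min(1.0, max(0.0, ratings[cid] + delta))
    return ratings


def aggregate(updates, ratings, flagged):
    """Rating-weighted average of the updates that were not flagged."""
    kept = [i for i in range(len(updates)) if i not in flagged and ratings[i] > 0]
    if not kept:
        raise ValueError("no trusted updates left to aggregate")
    total = sum(ratings[i] for i in kept)
    return [
        sum(ratings[i] * updates[i][j] for i in kept) / total
        for j in range(len(updates[0]))
    ]


updates = [
    [0.10, 0.20, 0.30],
    [0.12, 0.18, 0.31],
    [0.09, 0.21, 0.29],
    [5.00, -4.00, 9.00],  # an abnormal update from client 3
]
flagged = flag_outliers(updates)
ratings = update_ratings({i: 1.0 for i in range(len(updates))}, flagged)
global_update = aggregate(updates, ratings, flagged)
print(flagged, ratings, global_update)
```

The median is used as the reference point because a single extreme update barely moves it, whereas it would dominate a plain mean. In this toy run only client 3 is flagged, its rating drops to 0.5, and the global update is the average of the three remaining clients.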

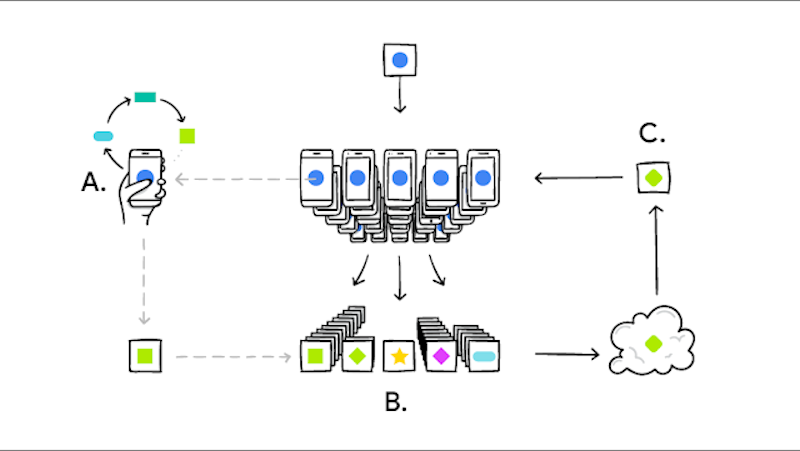

Fig. 1. Federated learning with mobile phones. Source: https://ai.googleblog.com/2017/04/federated-learning-collaborative.html

Federated learning is a new approach to distributed machine learning across multiple clients. It can be used to train models on mobile phones, IoT devices and any other device with enough computing power to run a training round. All of these devices collaborate to build a global model. In my research I address malicious updates, which can disrupt the federated learning process and degrade the global model until its predictions are no better than random.
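To make the threat concrete, the sketch below shows a FedAvg-style aggregation step, where the server averages client weights weighted by each client's number of local samples. This standard rule is assumed here for illustration; the platform's own aggregation is not described in the text. It shows how a single client sending an extreme update drags the global weights far from what the honest clients agree on. The function name `federated_average` and all numbers are hypothetical.

```python
"""Hypothetical sketch of one FedAvg-style round with a single malicious client.

Assumption: model weights are plain lists of floats and every client reports
its local sample count; names and values are invented for illustration.
"""


def federated_average(updates, num_samples):
    """Average client weight vectors, weighting each by its local sample count."""
    total = sum(num_samples)
    dim = len(updates[0])
    return [
        sum(n * u[j] for u, n in zip(updates, num_samples)) / total
        for j in range(dim)
    ]


honest = [[1.0, 2.0], [1.2, 1.8], [0.8, 2.2]]
samples = [100, 100, 100]
clean_model = federated_average(honest, samples)

# One client sends a scaled, sign-flipped update (a simple poisoning pattern).
poisoned = honest + [[-50.0, -100.0]]
poisoned_model = federated_average(poisoned, samples + [100])

print(clean_model)
print(poisoned_model)
```

With these numbers the honest average is approximately [1.0, 2.0], while adding the one poisoned update moves it to approximately [-11.75, -23.5], even though three of the four clients behaved correctly.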